On November 13, 2025, GitHub quietly rolled out an update that finally lets its GitHub Copilot coding agent work alongside strict repository rules, without forcing teams to choose between security and productivity. For months, teams using repository rulesets had been stuck: if a repository required cryptographic commit signing (a common practice for compliance, audits, or open-source integrity), the Copilot agent would disable itself, even if it was only being used to suggest minor fixes or refactor tests. Now administrators can choose which rules the agent may bypass while keeping those same rules locked down for humans. It's not a loophole. It's a deliberate exception.

Why Commit Signing Was a Dealbreaker

GitHub's rulesets feature lets teams enforce everything from commit message formats to branch protection policies. One of the most widely adopted requirements is cryptographic signing. It ensures that every commit added to a repository can be traced back to a verified identity, which is critical for financial institutions, government contractors, and open-source projects wary of supply chain attacks. But here's the catch: the GitHub Copilot coding agent doesn't have a private key, so it can't sign commits. Not because it's broken, but because it's not a person. It's an asynchronous assistant that works in the background, generating code without logging in, without a GPG key, and without a human hand on the keyboard. Before November 13, that meant the agent was blocked entirely: no partial access, no conditional use. Teams that wanted to keep signing requirements for their engineers had to turn the agent off completely.

The New Bypass Option: Precision, Not Permission

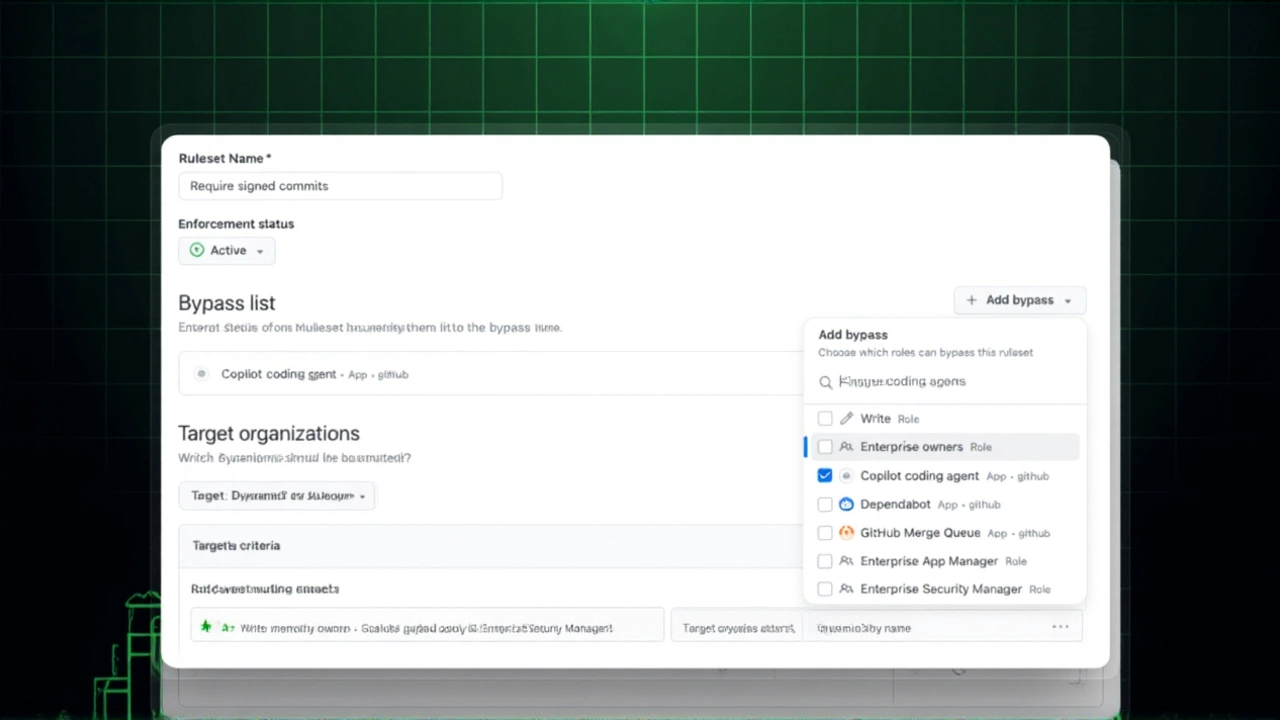

The new bypass capability, accessible under Repository Settings > Rulesets, lets admins designate the GitHub Copilot coding agent as an exempt actor for specific rules. You can still require all human contributors to sign commits, use approved email domains, and follow structured commit messages, while exempting the agent from "Require commit signing" alone. The interface makes this explicit: a small badge appears next to each rule, showing which actors are exempt. No guesswork. No accidental weakening of policy.

This isn't a blanket relaxation. It's surgical. You're not lowering standards; you're recognizing that AI operates under different constraints. As one senior DevOps lead at a Fortune 500 fintech firm told us off the record: "We still need to prove every line of code came from a verified engineer. But we also need our engineers to move faster. This lets us have both."
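To make the per-rule exemption concrete, here is a minimal Python model of the behavior described above: each rule carries a set of exempt actors, and a commit is checked only against the rules its author is not exempt from. The `Commit` and `Rule` types, the rule names, and the actor name `copilot-coding-agent` are hypothetical illustrations, not GitHub's API or its internal implementation.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass(frozen=True)
class Commit:
    author: str    # hypothetical actor identifier
    signed: bool   # whether the commit carries a verified signature
    message: str


@dataclass
class Rule:
    name: str
    check: Callable[[Commit], bool]  # True when the commit satisfies the rule
    exempt_actors: set[str] = field(default_factory=set)


def violations(commit: Commit, rules: list[Rule]) -> list[str]:
    """Names of rules the commit breaks, skipping rules its author is exempt from."""
    return [
        rule.name
        for rule in rules
        if commit.author not in rule.exempt_actors and not rule.check(commit)
    ]


AGENT = "copilot-coding-agent"  # hypothetical identifier, not GitHub's real actor ID

rules = [
    # Signing is required for everyone except the agent.
    Rule("require-signed-commits", lambda c: c.signed, exempt_actors={AGENT}),
    # Message format applies to everyone, the agent included.
    Rule("conventional-message",
         lambda c: c.message.startswith(("feat:", "fix:", "docs:", "test:"))),
]

if __name__ == "__main__":
    print(violations(Commit("alice", False, "fix: typo"), rules))      # ['require-signed-commits']
    print(violations(Commit(AGENT, False, "test: add cases"), rules))  # []
    print(violations(Commit(AGENT, False, "update stuff"), rules))     # ['conventional-message']
```

The third call shows the point of keeping exemptions per rule: the agent skips only the signing check, so a badly formatted message is still rejected.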

A Broader Shift in AI Governance

This update doesn't come in a vacuum. It follows GitHub's June 4, 2025 pricing overhaul, which began charging one premium request per autonomous Copilot action, whether that was suggesting a function, writing a test, or refactoring a class. That shift signaled a move away from treating Copilot as a simple autocomplete tool and toward treating it as an active, recurring participant in the development workflow.

Meanwhile, community backlash had been mounting. In May 2025, GitHub user mcclure raised alarms in a public discussion, warning that "GitHub will soon start allowing users to submit issues which they did not write themselves and were machine-generated." The concern? AI-generated pull requests and issues flooding repositories and bypassing contribution guidelines. Some maintainers had even started manually rejecting PRs tagged as Copilot-generated. The new bypass feature doesn't solve that problem, but it does acknowledge it. GitHub is no longer pretending AI agents are just fancy autocomplete. They're co-developers, and governance has to evolve accordingly.

What This Means for Teams

For organizations using rulesets for compliance (HIPAA, SOC 2, ISO 27001), this is huge. You can now keep your signing requirements intact for human contributors while letting Copilot handle routine tasks like updating documentation, writing unit tests, or fixing linter errors. One engineering manager at a healthcare SaaS company reported a 37% reduction in PR review time within two weeks of enabling the bypass for "non-sensitive" modules. "We still audit every change," they said. "But now, the boring stuff gets done before we even open the PR."

But there's a catch: this only works if you configure it correctly. GitHub's documentation warns that bypassing a ruleset doesn't disable enforcement; it only skips the exempted rules for the agent. Any rule you don't exempt still applies. So if you have a rule that blocks commits from unverified email addresses and you leave it off the agent's bypass list, Copilot still can't push from an unverified address. It's not magic. It's configuration.
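In GitHub's REST API for repository rulesets (`POST /repos/{owner}/{repo}/rulesets`), bypass actors are attached to a whole ruleset rather than to an individual rule. So one way to script the configuration described above is to put the signing rule in its own ruleset with the agent as a bypass actor, and keep other rules, such as a commit message pattern, in a ruleset with no bypass actors. This is a sketch under stated assumptions: the agent's `actor_id` and `actor_type` below are placeholders, because this article does not say how the agent is represented, and the ruleset names and message pattern are illustrative.

```python
import json
import urllib.request

API = "https://api.github.com"

# ASSUMPTION: placeholder values. How the Copilot coding agent appears as a
# bypass actor (its actor_type and actor_id) must be looked up in GitHub's docs.
AGENT_ACTOR = {"actor_id": 0, "actor_type": "Integration", "bypass_mode": "always"}


def ruleset(name: str, rules: list[dict], bypass_actors: list[dict]) -> dict:
    """Build a repository ruleset body that targets the default branch."""
    return {
        "name": name,
        "target": "branch",
        "enforcement": "active",
        "bypass_actors": bypass_actors,
        "conditions": {"ref_name": {"include": ["~DEFAULT_BRANCH"], "exclude": []}},
        "rules": rules,
    }


# Signing lives in its own ruleset, so the agent's exemption covers only this rule.
signing = ruleset("require-signing", [{"type": "required_signatures"}], [AGENT_ACTOR])

# The message format stays in a ruleset with no bypass actors, so it still binds the agent.
messages = ruleset(
    "commit-message-format",
    [{
        "type": "commit_message_pattern",
        "parameters": {"operator": "regex", "pattern": r"^(feat|fix|docs|test): ", "negate": False},
    }],
    [],
)


def create_ruleset(owner: str, repo: str, token: str, body: dict) -> dict:
    """POST one ruleset to the repository rulesets endpoint and return the parsed response."""
    req = urllib.request.Request(
        f"{API}/repos/{owner}/{repo}/rulesets",
        data=json.dumps(body).encode(),
        method="POST",
        headers={
            "Authorization": f"Bearer {token}",
            "Accept": "application/vnd.github+json",
            "X-GitHub-Api-Version": "2022-11-28",
        },
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)
```

Splitting the rules this way makes the exemption's scope visible in the data itself: anything outside the `require-signing` ruleset is enforced for the agent exactly as it is for people.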

What’s Next?

GitHub has hinted that future updates may extend this bypass model to other AI tools, like code review assistants or automated dependency updaters. The real question isn't whether AI will be allowed in repositories; it's how many rules we'll need to rethink. The Copilot agent can't sign commits. But it can write them. And now GitHub is saying: that's okay, as long as we know who's accountable.

For now, the message is clear: AI isn't replacing developers. It's changing what "developer" means. And governance has to catch up.

Frequently Asked Questions

Can I still require commit signing for human developers after enabling the Copilot bypass?

Yes, absolutely. The bypass only applies to the GitHub Copilot coding agent. All human contributors must still comply with every ruleset requirement, including commit signing, email verification, and message formatting. The feature was designed specifically to preserve security for people while accommodating AI’s technical limitations.

Which rules can the Copilot agent bypass?

Currently, the bypass applies to rules that require cryptographic commit signing, email address matching, and commit message patterns. GitHub’s documentation notes that other rule types may be supported in future updates. However, branch protection rules and required status checks remain fully enforced—even for the agent.
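Before editing a ruleset, the list in this answer can be encoded as an allow-list and checked in advance. This Python sketch assumes the article's three categories map onto the REST API rule type names shown (`required_signatures`, the two email-pattern types, and `commit_message_pattern`); that mapping is our assumption, and `unsupported_exemptions` is a hypothetical helper, not part of any GitHub tool.

```python
# ASSUMPTION: mapping the article's bypassable categories onto REST rule type names.
AGENT_BYPASSABLE = frozenset({
    "required_signatures",          # cryptographic commit signing
    "commit_author_email_pattern",  # email address matching (author)
    "committer_email_pattern",      # email address matching (committer)
    "commit_message_pattern",       # commit message patterns
})


def unsupported_exemptions(requested: set[str]) -> set[str]:
    """Return the requested rule types the agent cannot currently be exempted from."""
    return requested - AGENT_BYPASSABLE


if __name__ == "__main__":
    # Status checks stay enforced for the agent, so they are flagged.
    print(unsupported_exemptions({"required_signatures", "required_status_checks"}))
```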

Does this feature make repositories less secure?

Not if configured properly. The bypass doesn’t remove any security controls—it simply acknowledges that AI agents can’t meet human-specific requirements like signing. All Copilot-generated code still goes through the same review process as human-written code. The real risk lies in misconfiguring the bypass, so GitHub’s interface clearly highlights which rules are being exempted.

How does this affect Copilot’s pricing model?

It doesn’t. The June 4, 2025 pricing update—charging one premium request per autonomous Copilot action—remains unchanged. Whether the agent is blocked by rules or allowed to bypass them, each code suggestion, refactoring, or test generation still counts as a premium request. The bypass affects functionality, not cost.

Can I use this to bypass rules for other AI tools?

Not yet. As of now, the bypass is exclusive to the GitHub Copilot coding agent. Other AI tools, like automated PR reviewers or dependency scanners, still must comply with all rulesets. GitHub has not announced plans to extend this feature to third-party bots, though community feedback suggests it may be coming.

What should I do if I’m worried about AI-generated code in my repo?

Enable the bypass only for non-critical modules and always require human review before merging. GitHub still requires all Copilot-generated code to be reviewed by a human, and you can use tools like CodeQL or GitHub’s own AI detection labels to flag suspicious contributions. The bypass doesn’t remove accountability—it just makes collaboration with AI practical.